Abstract

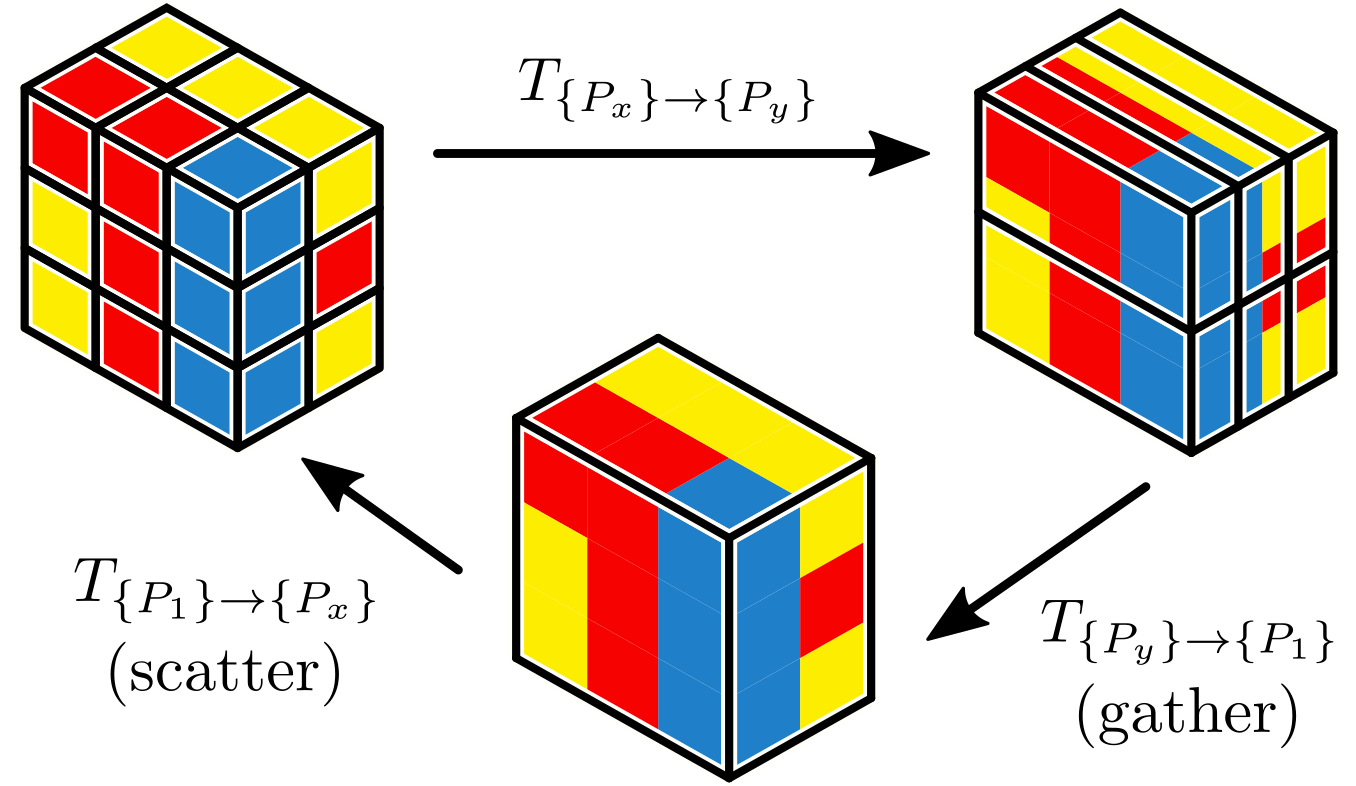

Training deep neural networks (DNN) in distributed computing environments is increasingly necessary, as DNNs grow in size and complexity. Local memory and processing limitations require robust data and model parallelism for crossing compute node boundaries. We propose a linear-algebraic approach to model parallelism in deep learning, which allows parallel distribution of any tensor in the DNN using traditional domain decomposition strategies. Rather than rely on automatic differentiation tools, which do not universally support distributed memory parallelism models, we show that classical parallel data movement operations are linear operators, and by defining the relevant spaces and inner products, we can manually develop the adjoint, or backward, operators required for gradient-based optimization. We extend these ideas to define a set of data movement primitives on distributed tensors, e.g., broadcast, sum-reduce, and halo exchange, which we use to build distributed neural network layers. We demonstrate the effectiveness of this approach by scaling ResNet and U-net examples over dozens of GPUs and thousands of CPUs, respectively.